license: apache-2.0

This repo provides the model weights released with the paper *Towards Neural Scaling Laws for Time Series Foundation Models*.

The models range in size from 1M to 1B parameters and were trained on datasets of 10M to 16B time points.

Code: https://github.com/Qingrenn/TSFM-ScalingLaws

Dataset: https://huggingface.co/datasets/Qingren/TSFMScalingLaws

Figure 1: Scaling laws for negative log-likelihood (NLL) as a function of model size, compute, and dataset size. The blue lines show in-distribution (ID) performance; the red and green lines show out-of-distribution (OOD) performance on the LSF subset and the Monash subset.

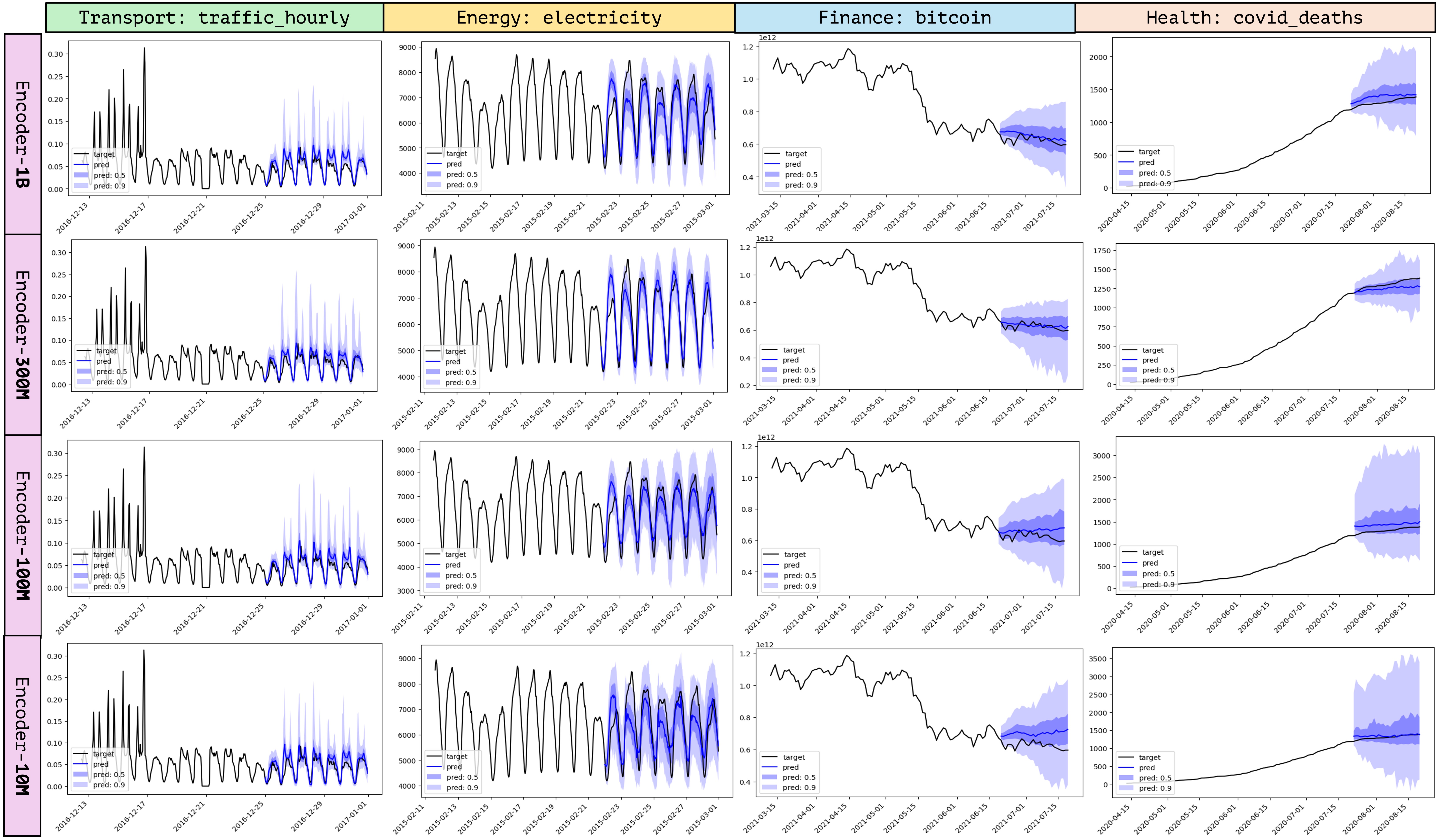
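Trends like those in Figure 1 are often summarized by fitting a power law, `loss = a * N**(-b)`, to (size, loss) pairs. The sketch below is only an illustration under stated assumptions: the power-law form is a common convention and is not confirmed here as the paper's exact parameterization, the helper `fit_power_law` is hypothetical, and the data points are synthetic placeholders rather than results from the paper. The log-log fit also assumes strictly positive loss values, which does not always hold for NLL.

```python
import math


def fit_power_law(sizes, losses):
    """Least-squares fit of loss = a * size**(-b) in log-log space.

    Hypothetical helper for illustration; requires positive sizes and losses.
    """
    xs = [math.log(s) for s in sizes]
    ys = [math.log(v) for v in losses]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Ordinary least squares on the line log(loss) = intercept + slope * log(size).
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    # Map back to the power-law parameters: a = exp(intercept), b = -slope.
    return math.exp(intercept), -slope


# Synthetic placeholder points generated from loss = 5 * N**(-0.1).
# These are NOT measurements from the paper.
sizes = [1e6, 1e7, 1e8, 1e9]
losses = [5.0 * n ** -0.1 for n in sizes]

a, b = fit_power_law(sizes, losses)
print(f"a = {a:.3f}, b = {b:.3f}")
```

Fitting in log-log space turns the power law into a straight line, so the exponent `b` is simply the negated slope. The same helper could be applied to compute or dataset size in place of model size.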

Figure 2: Prediction results of models with sizes 1B, 300M, 100M, and 10M.